GameRx

Your Digital Dose. Prescribed by Play.

Fast Facts for Busy Reviewers

Goal:

Build a human-centered framework that uses player-written Steam reviews to understand emotional experiences in games and explore whether titles can be matched to emotional relief needs.

Tools Used:

Python • Jupyter Notebook • NLP (NRCLex) • Scikit-learn • Streamlit

Key Wins:

🎮 Translated millions of player reviews into structured emotional signals using NLP

🧠 Defined and operationalized four emotional relief pathways: Comfort, Catharsis, Distraction, Validation

📊 Revealed that emotional play styles cut across traditional genre boundaries

🔍 Built a hybrid model that prioritizes player emotion while using genre as explainable context

💡 Produced an app-ready dataset powering mood-based game recommendations

Strategic Takeaways:

Players already use games as emotional tools, but game discovery is largely driven by popularity, trends, or promotions

“Negative” emotions don’t always signal a bad experience; they often reflect intentional play

Hybrid modeling improves coverage, interpretability, and safety across the dataset

Games can help shift emotions, not by erasing them, but by guiding them toward relief

From Analysis to Application:

GitHub: Documents the full analytical pipeline

Streamlit: Translates the analysis into an interactive experience

Explore the Code Behind the Insights

Interactive app. Best viewed on desktop.

The Challenge

The idea for GameRx came from a simple moment: choosing a game during a stressful period. Without thinking about it, I realized I wasn’t just picking something to play; I was trying to shift how I was feeling.

That raised a larger question.

What if other people are making similar choices, turning to games to cope, reset, or regain a sense of control, but without any guidance on what to play based on what they need emotionally?

This led me to explore whether emotional experiences described in player reviews could be measured at scale and used to better understand how games support emotional relief.

Key questions:

Can player-written reviews reliably capture emotional experiences?

Do different types of games support different forms of emotional relief?

Can difficult emotions like stress, anger, or sadness reflect intentional play?

Is emotional relief a more useful lens for understanding games than labels alone?

My goal was not to predict preferences, but to explore whether games could be understood and grouped by emotional relief.

Problem

Players often choose games based on how they want to feel, but game discovery is largely driven by what’s popular, trending, or promoted. Emotional context is rarely part of the decision process, leaving people to rely on trial and error when seeking relief.

Tools Used

To explore trends and structure the analysis, I used:

Python

Jupyter Notebook

NLP (NRCLex)

Pandas and NumPy

Scikit-learn

Matplotlib and Seaborn

Key Insights

Emotional signals in player reviews are consistent and measurable at scale.

Games frequently support emotional relief in ways that don’t align cleanly with genre labels.

“Negative” emotions often reflect intentional play experiences rather than dissatisfaction.

Emotional play styles span multiple genres and design types.

From Insights to Impact

Help players choose games based on how they want or need to feel.

Surface games that support comfort, release, focus, or validation beyond popularity.

Translate player review language into meaningful emotional signals at scale.

Show how emotional context can complement genre and design information.

Data Cleaning Highlights

Before building any models or insights, I focused on making the GameRx dataset reliable, consistent, and safe to use for emotional analysis. Because this project relies on player-written reviews at scale, cleaning wasn’t just technical; it directly shaped which emotional signals could be trusted.

Here’s what I did:

🎮 Standardized game and metadata fields

Cleaned and normalized game names, genres, platforms, and publishers to avoid duplicate entries and inconsistent grouping across sources.
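As a rough illustration of what name and genre normalization can look like, here is a minimal sketch. The helper names `normalize_name` and `normalize_genres` and the specific rules (strip trademark symbols, lowercase, drop punctuation, collapse whitespace) are assumptions, not the project's actual cleaning code.

```python
import re
import unicodedata

def normalize_name(name: str) -> str:
    """Hypothetical normalizer so spelling variants of a title share one key."""
    # Remove trademark symbols first (NFKC would otherwise turn ™ into "TM").
    text = re.sub(r"[™®©]", "", name)
    text = unicodedata.normalize("NFKC", text)
    # Lowercase, replace punctuation with spaces, then collapse whitespace.
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()

def normalize_genres(raw: str) -> list[str]:
    """Split a comma-separated genre string into sorted, de-duplicated labels."""
    return sorted({g.strip().lower() for g in raw.split(",") if g.strip()})

print(normalize_name("Stardew Valley™"))       # stardew valley
print(normalize_name("  STARDEW  valley "))    # stardew valley
print(normalize_genres("indie, RPG,Indie, "))  # ['indie', 'rpg']
```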

🎮 Cleaned and structured review text

Removed nulls, empty reviews, and malformed entries. Consolidated review text into a single analysis-ready field to support NLP processing.
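A minimal sketch of this kind of text cleanup, assuming each raw row is a dict with hypothetical `app_id`, `title`, and `body` keys. Treating non-string values as "malformed" and merging title and body into one `text` field are illustrative choices, not a description of the project's real schema.

```python
import re

def clean_reviews(rows):
    """Keep rows whose combined review text is non-empty after cleanup.

    `rows` are dicts with hypothetical keys "app_id", "title", and "body";
    the two text fields are merged into one "text" field for NLP.
    """
    cleaned = []
    for row in rows:
        parts = [row.get("title"), row.get("body")]
        # Drop nulls and non-string values (a stand-in for "malformed").
        parts = [p for p in parts if isinstance(p, str)]
        text = re.sub(r"\s+", " ", " ".join(parts)).strip()
        # Skip rows with no game id or no usable text.
        if row.get("app_id") is None or not text:
            continue
        cleaned.append({"app_id": row["app_id"], "text": text})
    return cleaned

raw = [
    {"app_id": 1, "title": "Cozy", "body": "so relaxing\n\n"},
    {"app_id": 2, "title": None, "body": "   "},
    {"app_id": None, "title": "orphan", "body": "no game id"},
    {"app_id": 3, "title": 42, "body": "still readable"},
]
print(clean_reviews(raw))
```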

🎮 Prepared emotion features from raw text

Processed millions of player reviews using an emotion lexicon (NRCLex), generating consistent emotion scores per game while handling sparse or noisy language.
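Lexicon-based emotion scoring boils down to looking up each word in a word-to-emotion table, counting hits, and averaging per game. NRCLex does the lookup against the NRC Emotion Lexicon; the sketch below swaps in a tiny hypothetical `TOY_LEXICON` so it runs on its own, and its per-review "share of hits" scoring and simple per-game mean are assumptions about how the aggregation could work.

```python
import re
from collections import Counter, defaultdict

# Toy stand-in for the NRC Emotion Lexicon (word -> emotions); illustrative only.
TOY_LEXICON = {
    "calm": ["trust", "joy"],
    "cozy": ["joy"],
    "rage": ["anger"],
    "furious": ["anger"],
    "scary": ["fear"],
    "lonely": ["sadness"],
}
EMOTIONS = ["anger", "fear", "joy", "sadness", "trust"]

def emotion_frequencies(text):
    """Share of lexicon hits per emotion in one review (all zeros if no hits)."""
    hits = Counter()
    for word in re.findall(r"[a-z']+", text.lower()):
        hits.update(TOY_LEXICON.get(word, []))
    total = sum(hits.values())
    return {e: (hits[e] / total if total else 0.0) for e in EMOTIONS}

def game_profiles(reviews):
    """Average per-review emotion frequencies for each app_id.

    Reviews with no lexicon hits still count, contributing all zeros.
    """
    sums = defaultdict(lambda: dict.fromkeys(EMOTIONS, 0.0))
    counts = Counter()
    for r in reviews:
        for e, v in emotion_frequencies(r["text"]).items():
            sums[r["app_id"]][e] += v
        counts[r["app_id"]] += 1
    return {app: {e: s[e] / counts[app] for e in EMOTIONS} for app, s in sums.items()}

reviews = [
    {"app_id": 1, "text": "So calm and cozy"},
    {"app_id": 1, "text": "cozy evenings"},
    {"app_id": 2, "text": "Furious boss fights, pure rage"},
]
print(game_profiles(reviews))
```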

🎮 Filtered unsafe or irrelevant content

Identified and flagged content that didn’t belong in a wellbeing-focused recommendation system, ensuring the dataset aligned with the project’s intent.
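One way to flag rather than delete is to add a boolean column plus the reason it was set, so raw rows stay intact and the app filters on the flag. In this sketch, `BLOCKED_TAGS`, `MANUAL_BLOCK_APP_IDS`, and the record fields are all hypothetical; the write-up doesn't spell out the actual criteria.

```python
# Hypothetical blocklists: the real criteria are not described in the write-up.
BLOCKED_TAGS = {"sexual content", "gambling"}
MANUAL_BLOCK_APP_IDS = {999}

def flag_games(games):
    """Return copies of game records with a boolean 'blocked' flag and reasons.

    Rows are flagged rather than deleted, so the raw data stays intact and
    downstream code can simply filter on the flag.
    """
    flagged = []
    for g in games:
        tags = {t.lower() for t in g.get("tags", [])}
        reasons = sorted(tags & BLOCKED_TAGS)
        if g["app_id"] in MANUAL_BLOCK_APP_IDS:
            reasons.append("manual")
        flagged.append({**g, "blocked": bool(reasons), "block_reasons": reasons})
    return flagged

games = [
    {"app_id": 1, "name": "cozy farm", "tags": ["Casual", "Farming Sim"]},
    {"app_id": 2, "name": "casino night", "tags": ["Gambling"]},
    {"app_id": 999, "name": "mislabeled demo", "tags": ["Puzzle"]},
]
safe = [g["name"] for g in flag_games(games) if not g["blocked"]]
print(safe)  # ['cozy farm']
```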

🎮 Resolved duplicates and edge cases

Handled duplicated games, mismatched AppIDs, and inconsistent review counts so each game represented a single, coherent emotional profile.
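A minimal sketch of collapsing duplicate rows to one record per AppID. The conflict rules here are assumptions: keep the largest reported review count and take the union of genre labels. The IDs, names, and counts are toy values.

```python
def dedupe_by_app_id(records):
    """Collapse duplicate rows to one record per app_id.

    Assumption (not stated in the write-up): when sources disagree on the
    review count, keep the largest count, and union the genre labels.
    """
    merged = {}
    for rec in records:
        key = rec["app_id"]
        if key not in merged:
            merged[key] = {**rec, "genres": set(rec["genres"])}
            continue
        kept = merged[key]
        kept["genres"] |= set(rec["genres"])
        if rec["review_count"] > kept["review_count"]:
            kept["name"] = rec["name"]
            kept["review_count"] = rec["review_count"]
    # Turn genre sets back into sorted lists for stable output.
    return [{**r, "genres": sorted(r["genres"])} for r in merged.values()]

rows = [
    {"app_id": 1, "name": "game a", "genres": ["indie"], "review_count": 120},
    {"app_id": 1, "name": "game a", "genres": ["rpg"], "review_count": 135},
    {"app_id": 2, "name": "game b", "genres": ["puzzle"], "review_count": 90},
]
print(dedupe_by_app_id(rows))
```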

🎮 Created app-ready and model-ready versions

Produced clean, versioned datasets for clustering, evaluation, and the Streamlit app, keeping raw data intact while enforcing a single source of truth downstream.

💡 What This Helped With

Enabled stable emotion clustering and pathway modeling

Prevented emotional signals from being skewed by noise or duplicates

Made emotional comparisons meaningful across thousands of games

Ensured the recommendation logic reflected player experience, not data artifacts

Full notebooks and code available on GitHub

Analytical Build

Once the GameRx data was clean and trustworthy, I moved into the analysis phase. At this point, my goal wasn’t just to find patterns; it was to understand how players emotionally experience games and whether those experiences show up consistently across thousands of titles.

To do that, I combined simple exploration, visual checks, and clustering to see how games naturally group based on emotional signals from player reviews.

🎮 Preparing Emotional Signals

I started by turning player-written reviews into usable emotional data.

Using an emotion lexicon (NRCLex), I converted review language into emotion scores for each game. These scores were then aggregated so every game had a clear emotional profile, regardless of how many reviews it had.

I was careful here. Games with fewer reviews weren’t allowed to look artificially extreme, and noisy or inconsistent signals were handled so they didn’t distort the results.

This step mattered because everything downstream depended on these emotion signals being stable and comparable.
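The write-up doesn't say exactly how low-review games were kept from looking extreme. One common technique is shrinkage: blend each game's average with the dataset-wide average, weighted by review count. The sketch below assumes that approach, and `prior_weight` is a hypothetical tuning knob.

```python
def shrink_profile(game_mean, n_reviews, global_mean, prior_weight=50):
    """Pull a game's emotion averages toward the dataset-wide average.

    Weighted (Bayesian-style) average: with few reviews the global mean
    dominates; with many reviews the game's own signal dominates.
    `prior_weight` is a hypothetical knob, roughly "how many reviews of
    evidence the global average is worth".
    """
    w = n_reviews / (n_reviews + prior_weight)
    return {e: w * game_mean[e] + (1 - w) * global_mean[e] for e in game_mean}

global_mean = {"joy": 0.30, "anger": 0.10}
tiny = shrink_profile({"joy": 1.0, "anger": 0.0}, n_reviews=2, global_mean=global_mean)
large = shrink_profile({"joy": 1.0, "anger": 0.0}, n_reviews=5000, global_mean=global_mean)
print(round(tiny["joy"], 3), round(large["joy"], 3))  # 0.327 0.993
```

A game with two glowing reviews ends up close to the overall average, while a game with thousands of reviews keeps nearly its own profile.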

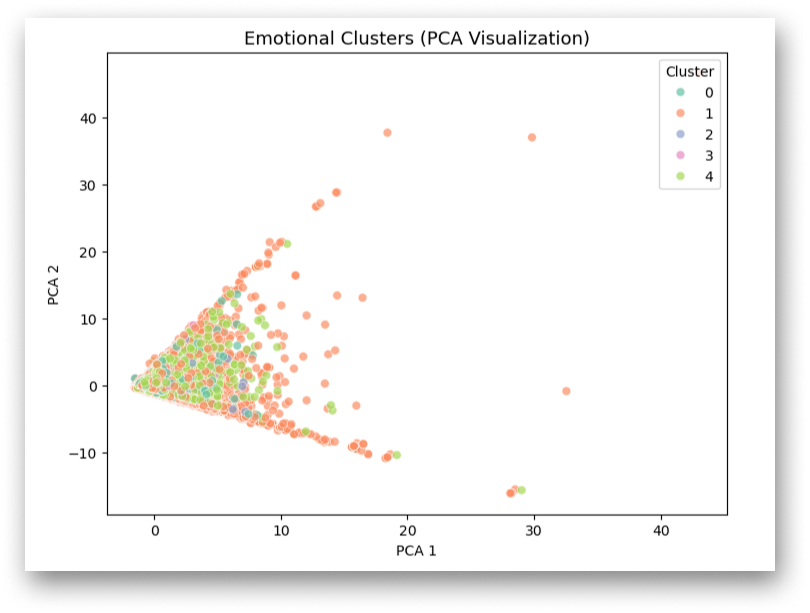

🎮 Exploring Emotional Groupings

Next, I wanted to see whether games naturally grouped together based on how they made players feel, not how they were labeled.

I used clustering to explore patterns across the emotional profiles. When I looked at the results, clear groupings began to appear. Some games consistently supported comfort, others leaned toward release or intensity, and some helped players disengage and focus.

What stood out was that these groupings didn’t map cleanly to genre. Games that looked very different on the surface often served similar emotional roles.

Key Insight:

Genre tells you what a game is. Emotional patterns tell you what a game does for the player.
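The write-up doesn't name the clustering algorithm. Assuming k-means (the usual choice, available as `KMeans` in scikit-learn), the dependency-free sketch below shows the mechanics on toy two-emotion profiles: assign each game to its nearest centroid, move each centroid to the mean of its members, and repeat until assignments stop changing.

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on equal-length emotion vectors.

    Returns (labels, centroids). Centroids start at k distinct random points.
    """
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each game joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:
            break
        labels = new_labels
    return labels, centroids

# Toy (joy, anger) profiles: three calm games, two intense ones.
points = [(0.9, 0.05), (0.85, 0.1), (0.1, 0.8), (0.15, 0.9), (0.88, 0.02)]
labels, centroids = kmeans(points, k=2)
print(labels)
```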

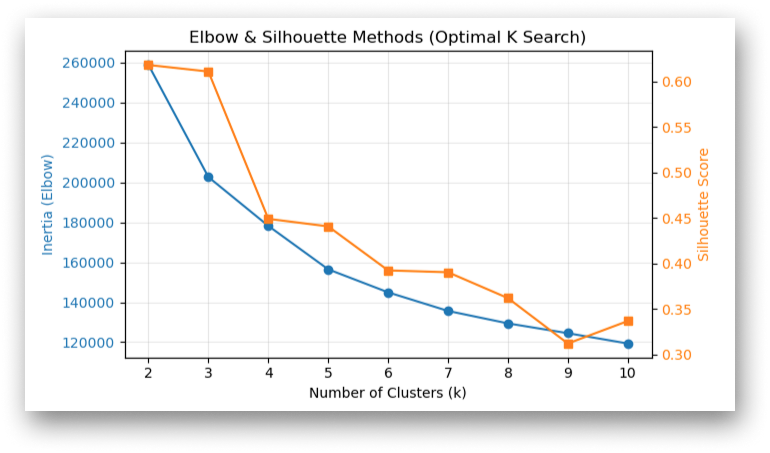

🎮 Deciding How Much Structure Was Enough

Rather than forcing the data into too many categories, I tested different levels of grouping to find a balance between clarity and usefulness.

Too few groups flattened meaningful differences. Too many made the results hard to explain and impractical for recommendations.

I chose a structure that preserved real emotional differences while still being easy to interpret and apply later in the app.

Key Insight:

The best model wasn’t the most complex one. It was the one that stayed understandable.
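The write-up doesn't say which criterion guided the choice of how many groups to keep. The silhouette coefficient is one standard way to compare candidate groupings, and scikit-learn exposes it as `silhouette_score`. The stdlib sketch below assumes that criterion and compares a 2-group split of toy profiles with a 3-group split that breaks up the calm games. It needs at least two clusters.

```python
import math

def silhouette(points, labels):
    """Mean silhouette coefficient (Euclidean), in [-1, 1]; higher = better separated.

    Points alone in their cluster score 0, following the usual convention.
    Requires at least two distinct labels.
    """
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # mean distance within own cluster
        b = min(                 # mean distance to the nearest other cluster
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(0.9, 0.05), (0.85, 0.1), (0.1, 0.8), (0.15, 0.9), (0.88, 0.02)]
two_groups = [0, 0, 1, 1, 0]
three_groups = [0, 1, 2, 2, 0]  # splits the calm games apart
print(silhouette(points, two_groups) > silhouette(points, three_groups))  # True
```

In practice a score like this is only one input; the text above describes also weighing how explainable the groups would be in the app.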

🎮 Sanity Checks and Validation

Before trusting the results, I reviewed actual games inside each group.

I checked emotion distributions, read sample reviews, and looked at how genres overlapped within clusters. This helped confirm that the groupings made sense in real-world terms and reflected intentional player experiences.

This step ensured the analysis stayed grounded in player behavior, not just math.
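A quick way to check how genres overlap within clusters is to find each cluster's most common genre and the share of its games carrying it; a low share means the cluster spans several genres. This is a minimal sketch with toy games and hypothetical field names, not the project's validation code.

```python
from collections import Counter, defaultdict

def genre_mix(games, labels):
    """For each cluster, return (most common genre, share of games tagged with it).

    Each game contributes every genre it lists, once.
    """
    per_cluster = defaultdict(Counter)
    sizes = Counter(labels)
    for game, lab in zip(games, labels):
        per_cluster[lab].update(game["genres"])
    summary = {}
    for lab, counts in per_cluster.items():
        genre, n = counts.most_common(1)[0]
        summary[lab] = (genre, n / sizes[lab])
    return summary

games = [
    {"name": "a", "genres": ["simulation", "casual"]},
    {"name": "b", "genres": ["puzzle"]},
    {"name": "c", "genres": ["rpg", "casual"]},
    {"name": "d", "genres": ["action"]},
    {"name": "e", "genres": ["action", "roguelike"]},
]
labels = [0, 0, 0, 1, 1]
print(genre_mix(games, labels))
```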

Key Visuals & What They Show

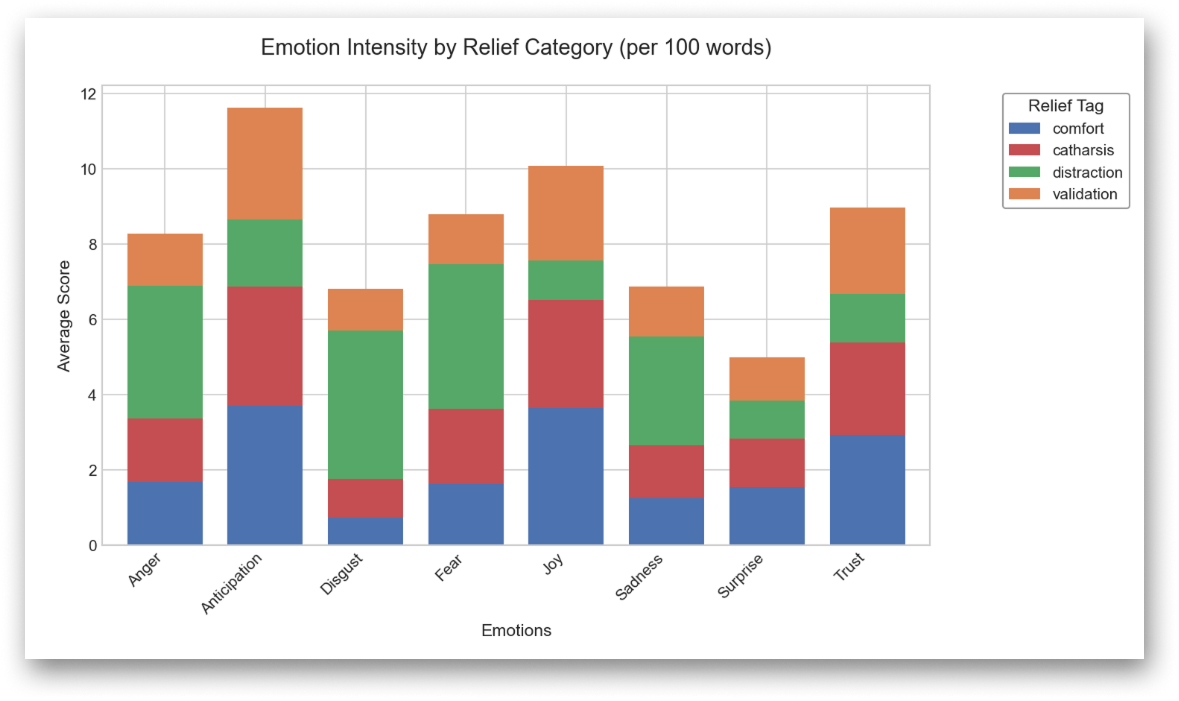

🎮 Emotion Intensity by Relief Pathway

This stacked bar chart shows how different emotions appear across each relief pathway (Comfort, Catharsis, Distraction, Validation), based on player-written reviews.

Instead of treating emotions as good or bad, this visual shows how they appear in context. Comfort leans toward calm and safety, Catharsis carries intensity and release, Distraction pulls attention away, and Validation reflects recognition and meaning.

What it shows:

Different types of games support different emotional needs, and those differences are measurable in how players talk about their experiences.
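The numbers behind a stacked bar like this can be produced by averaging each emotion within a pathway and rescaling so each bar sums to 1. This sketch assumes each game already carries a `pathway` label and an emotion `profile`; the rescaling step and the toy values are illustrative.

```python
from collections import defaultdict

def pathway_emotion_shares(games, emotions):
    """Average each emotion within a pathway, then rescale so each pathway's
    values sum to 1 (the segments of one stacked bar)."""
    totals = defaultdict(lambda: dict.fromkeys(emotions, 0.0))
    counts = defaultdict(int)
    for g in games:
        for e in emotions:
            totals[g["pathway"]][e] += g["profile"][e]
        counts[g["pathway"]] += 1
    shares = {}
    for pathway, sums in totals.items():
        means = {e: sums[e] / counts[pathway] for e in emotions}
        bar_total = sum(means.values()) or 1.0  # avoid dividing by zero
        shares[pathway] = {e: v / bar_total for e, v in means.items()}
    return shares

emotions = ["joy", "trust", "anger", "fear"]
games = [
    {"pathway": "Comfort", "profile": {"joy": 0.5, "trust": 0.3, "anger": 0.1, "fear": 0.1}},
    {"pathway": "Comfort", "profile": {"joy": 0.4, "trust": 0.4, "anger": 0.0, "fear": 0.2}},
    {"pathway": "Catharsis", "profile": {"joy": 0.2, "trust": 0.1, "anger": 0.4, "fear": 0.3}},
]
print(pathway_emotion_shares(games, emotions))
```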

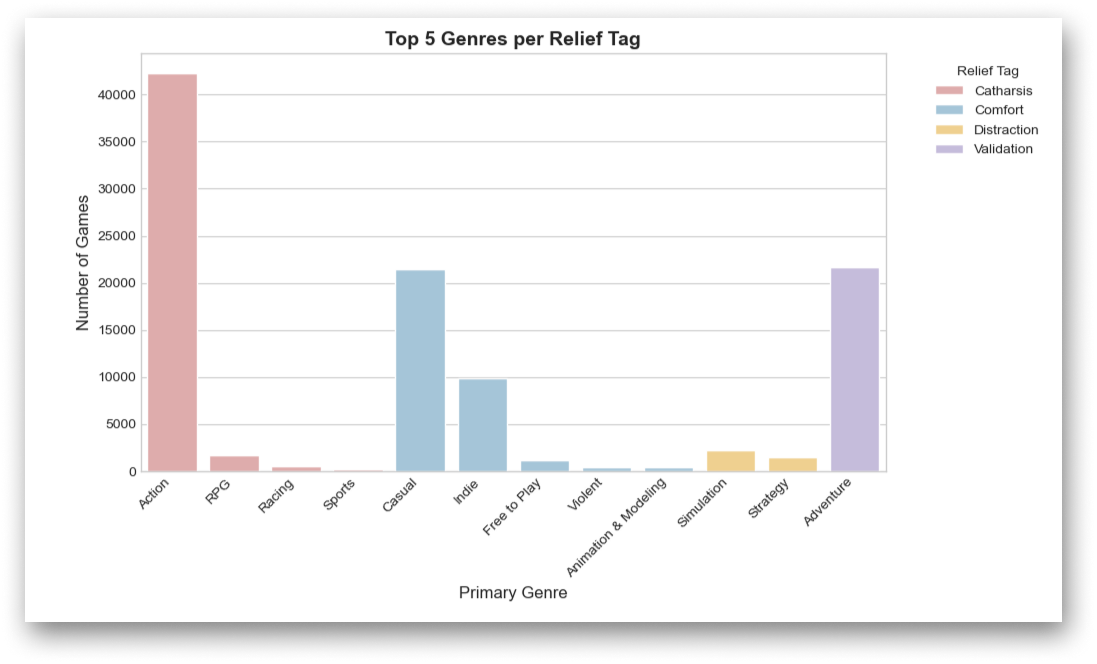

🎮 Genres Don’t Equal Feelings

This grouped bar chart compares the top genres associated with each relief pathway.

At first glance, you might expect genres to map cleanly to emotions. They don’t. The same genre can support very different emotional experiences depending on design, pacing, and player intent.

What it shows:

Genre alone doesn’t explain how a game feels. Emotional relief cuts across genres in ways traditional labels don’t capture, which is why emotional signals from player reviews are essential for understanding how games actually support different emotional needs.

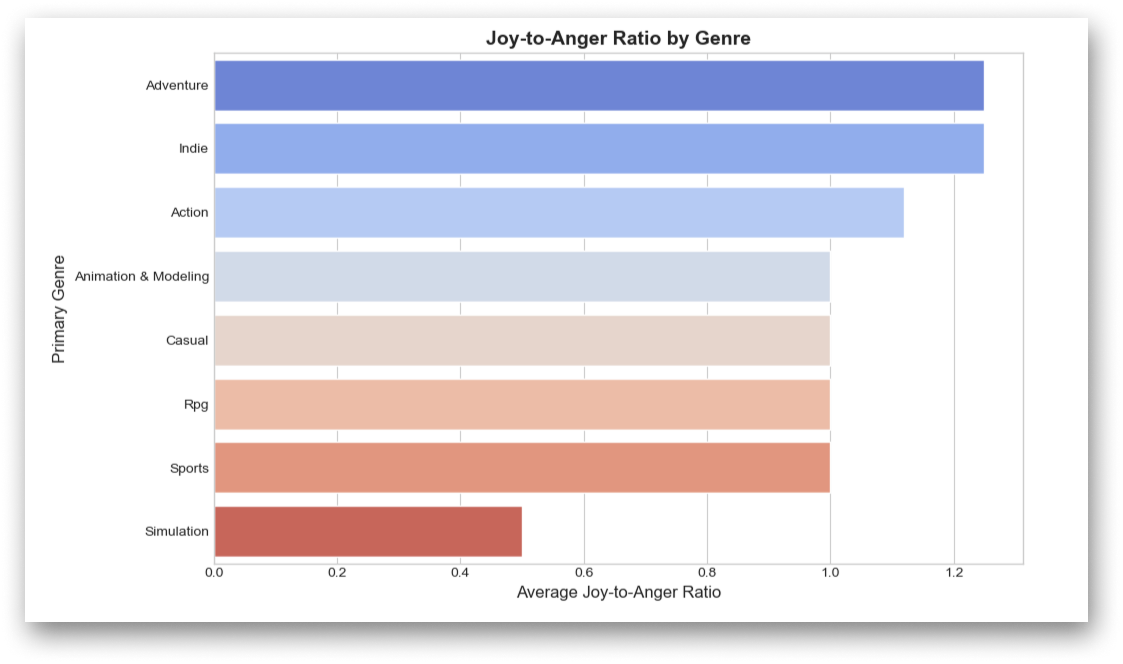

🎮 Emotional Balance Across Play Styles

This visual compares how joy and anger tend to show up across different game genres, based on player-written reviews.

Instead of treating all engagement the same, it highlights whether certain play styles lean toward emotional release or emotional balance.

What it shows:

Some genres consistently support grounding or comfort, while others lean into intensity and catharsis. This helped clarify why different games serve different emotional purposes, even when they appear similar on the surface.
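One simple way to summarize joy versus anger per genre is to average both and compute a balance index, (joy − anger) / (joy + anger), that runs from −1 (anger-led) to +1 (joy-led). The index is a hypothetical construction for illustration; the chart itself may encode the comparison differently.

```python
from collections import defaultdict

def joy_anger_balance(games):
    """Per genre: mean joy, mean anger, and a hypothetical balance index
    (joy - anger) / (joy + anger), from -1 (anger-led) to +1 (joy-led)."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # joy total, anger total, count
    for g in games:
        for genre in g["genres"]:
            s = sums[genre]
            s[0] += g["profile"]["joy"]
            s[1] += g["profile"]["anger"]
            s[2] += 1
    result = {}
    for genre, (joy, anger, n) in sums.items():
        mj, ma = joy / n, anger / n
        index = (mj - ma) / (mj + ma) if mj + ma else 0.0
        result[genre] = {"joy": mj, "anger": ma, "balance": index}
    return result

games = [
    {"genres": ["casual"], "profile": {"joy": 0.6, "anger": 0.05}},
    {"genres": ["action", "casual"], "profile": {"joy": 0.3, "anger": 0.3}},
    {"genres": ["action"], "profile": {"joy": 0.1, "anger": 0.5}},
]
print(joy_anger_balance(games))
```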

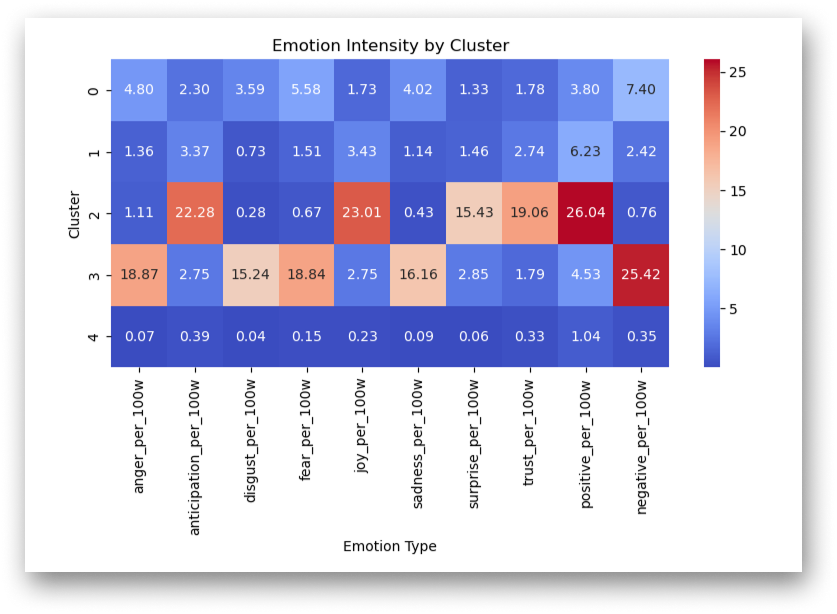

🎮 What Emotional Groupings Look Like

This heatmap shows the emotional makeup of each group of games identified during analysis.

Instead of organizing games by genre or popularity, this view highlights how different groups consistently evoke different emotional patterns based on player language.

What it shows:

Each group has a distinct emotional signature. Some are shaped by intensity and emotional release, while others reflect calm, safety, or balance. These patterns helped translate raw analysis into meaningful emotional play styles later used in the recommendation system.
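Heatmaps of cluster profiles are often easier to read when each emotion column is standardized across groups, so color shows which group is relatively high or low on that emotion. Whether GameRx's heatmap was standardized this way is an assumption; the centroid values below are toy data.

```python
import statistics

def zscore_columns(centroids, emotions):
    """Standardize each emotion column across clusters (mean 0, population std 1),
    so heatmap colors show which group is relatively high or low on an emotion."""
    out = [dict() for _ in centroids]
    for e in emotions:
        col = [c[e] for c in centroids]
        mu = statistics.fmean(col)
        sd = statistics.pstdev(col)
        for row, value in zip(out, col):
            row[e] = (value - mu) / sd if sd else 0.0  # flat column -> all zeros
    return out

centroids = [
    {"joy": 0.6, "anger": 0.1},
    {"joy": 0.2, "anger": 0.5},
    {"joy": 0.4, "anger": 0.3},
]
print(zscore_columns(centroids, ["joy", "anger"]))
```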

Main Insights (Recap)

🎮 Feelings Come Before Genres

Players don’t choose games just by genre. They choose them based on how they want to feel. The data showed that emotional relief cuts across traditional genre labels. The same genre can comfort one player and release tension for another, depending on how the game is designed and experienced.

❯❯❯❯ It’s not just what you play. It’s why you play.

🎮 Emotional Patterns Are Consistent at Scale

When thousands of reviews were analyzed together, clear emotional groupings emerged on their own. These patterns weren’t forced or pre-labeled. They showed that emotional play styles are repeatable, stable, and measurable across very different games.

❯❯❯❯ When players speak at scale, patterns start to form.

🎮 Emotional Context Changes Discovery

By translating player language into emotional signals, GameRx reframes how games can be discovered. Instead of relying on what’s popular or promoted, players can explore games based on comfort, release, focus, or validation, meeting emotional needs more intentionally.

❯❯❯❯ Choosing what to play can change how you feel.

From Data to Decisions

This project wasn’t about predicting what games people would like.

It came from a quieter realization: when choosing a game during a period of stress and uncertainty, the decision wasn’t really about entertainment. It was about how I wanted to feel next.

GameRx was built to explore that idea at scale. By using player-written Steam reviews, this project asks whether emotional experiences in games can be measured, understood, and responsibly used to support emotional relief through play.

Key Decisions Informed by the Data

01. Emotional experience is more informative than labels or assumptions

Player reviews consistently described emotional outcomes that didn’t always align with surface expectations. Games that appeared similar could support very different emotional needs.

❯❯❯❯ How a game feels to play matters more than how it’s described.

02. “Negative” emotions often reflect intentional play, not failure

Stress, anger, fear, and intensity showed up frequently in reviews of meaningful games. These emotions often pointed to release, focus, or catharsis rather than dissatisfaction.

❯❯❯❯ Emotional intensity can be purposeful, not harmful.

03. Safety and filtering required human judgment

Potentially harmful or misleading titles were handled with clear blocking flags and manual removals when needed. This kept the dataset honest while protecting users from recommendations that didn’t belong.

❯❯❯❯ Ethical systems don’t hide data. They handle it responsibly.

04. Emotional support follows relief pathways

Players didn’t describe games as making them feel one emotion. They described what games did for them: calming them down, helping them vent, pulling attention away, or making them feel understood.

❯❯❯❯ Relief is about what games do, not a single named emotion.

What This Changes

Players already use games as emotional tools.

GameRx makes that process visible, intentional, and safer by grounding recommendations in player language rather than trends or popularity.

Instead of asking “What should I play?”

GameRx starts with “How do I want to feel?”
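Starting from “How do I want to feel?” can be sketched as: map the stated goal to a relief pathway, drop blocked titles, rank by the game's score on that pathway, and attach genre only as an explanation. Everything named here (`MOOD_TO_PATHWAY`, `pathway_scores`, `blocked`, the toy games) is hypothetical; the mood phrases simply echo the four pathway descriptions above, and this is not the Streamlit app's actual logic.

```python
# Hypothetical mapping from a user's stated goal to a relief pathway.
MOOD_TO_PATHWAY = {
    "calm down": "Comfort",
    "let it out": "Catharsis",
    "take my mind off things": "Distraction",
    "feel understood": "Validation",
}

def recommend(games, mood, top_n=3):
    """Rank unblocked games by their score for the requested pathway.

    Emotion-derived pathway scores drive the ranking; genre is only attached
    as an explanation ("emotion first, genre as context").
    """
    pathway = MOOD_TO_PATHWAY[mood]
    candidates = [g for g in games if not g["blocked"]]
    ranked = sorted(candidates, key=lambda g: g["pathway_scores"][pathway], reverse=True)
    return [
        f'{g["name"]} ({pathway} {g["pathway_scores"][pathway]:.2f}; genre: {", ".join(g["genres"])})'
        for g in ranked[:top_n]
    ]

games = [
    {"name": "cozy farm", "genres": ["simulation"], "blocked": False,
     "pathway_scores": {"Comfort": 0.82, "Catharsis": 0.10, "Distraction": 0.40, "Validation": 0.30}},
    {"name": "boss rush", "genres": ["action"], "blocked": False,
     "pathway_scores": {"Comfort": 0.05, "Catharsis": 0.90, "Distraction": 0.50, "Validation": 0.20}},
    {"name": "casino night", "genres": ["casual"], "blocked": True,
     "pathway_scores": {"Comfort": 0.95, "Catharsis": 0.00, "Distraction": 0.60, "Validation": 0.10}},
]
print(recommend(games, "calm down", top_n=2))
# ['cozy farm (Comfort 0.82; genre: simulation)', 'boss rush (Comfort 0.05; genre: action)']
```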

Explore the Code Behind the Insights

Want to see how GameRx was built, from raw Steam reviews to a human-centered emotional relief framework, or try the interactive Streamlit app?

The full project lives on GitHub, with each step documented, from data cleaning and emotion extraction to hybrid modeling, safety filtering, and app preparation.

The Streamlit prototype lets you experience how emotional relief pathways translate into real, usable recommendations.
